Don't Write Evals for Fast-Moving Systems

You're developing an LLM-powered system. It's moving fast. Should you write evals? Not yet.

25 Jan, 2026 - 03 Mins read

I’ve been using Claude Code for personal productivity: personal knowledge management (PKM) and idea productionizing (turning ideas into presentations, LinkedIn posts, etc.). The main challenge has been making the right context available in plain text and making the relevant abilities available as CLI scripts. Everything else follows from the strength of the underlying LLM.

I ran four experiments.

The goal here was to integrate the following sources of information into a single, searchable, and queryable knowledge base. Together, these sources cover 90% of my daily personal knowledge work:

mbsync and indexed/searched with notmuch),

I stored Rosebud and MS To-Do exports in SQLite databases that Claude Code can query. The purpose was to bring all the disparate “exhaust fumes” of my cognition into a single, queryable place.
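As an illustration of the export-to-SQLite step, here is a minimal sketch that loads a CSV task export into a `tasks` table and then runs the kind of query Claude Code could issue. It assumes a hypothetical CSV with `title`, `created`, and `completed` columns; the actual Rosebud and MS To-Do export formats may look quite different.

```python
import csv
import io
import sqlite3


def load_tasks(conn: sqlite3.Connection, csv_text: str) -> int:
    """Load a CSV task export into a `tasks` table; return the number of rows inserted.

    Assumption: the export has hypothetical columns `title`, `created`
    (ISO 8601 date) and `completed` ("true"/"false"). Adapt to the real format.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS tasks ("
        " title TEXT NOT NULL,"
        " created TEXT,"
        " completed INTEGER)"
    )
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [
        # Normalize the textual boolean to 0/1 so SQL filters stay simple.
        (r["title"], r["created"], 1 if r["completed"].strip().lower() == "true" else 0)
        for r in reader
    ]
    conn.executemany("INSERT INTO tasks VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    # Illustrative sample data, not a real export.
    sample = (
        "title,created,completed\n"
        "Example task 1,2026-01-10,true\n"
        "Example task 2,2026-01-12,false\n"
    )
    conn = sqlite3.connect(":memory:")  # use a file path for a persistent knowledge base
    print(load_tasks(conn, sample))
    # A query Claude Code could run: open tasks, newest first.
    for (title,) in conn.execute(
        "SELECT title FROM tasks WHERE completed = 0 ORDER BY created DESC"
    ):
        print(title)
```

Storing ISO 8601 dates as text keeps `ORDER BY created` correct, because such strings sort lexicographically in date order.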

I then created the following Claude Code skills, augmented with scripts for deterministic retrieval of the data. Here are my favorite skills:

I plan to iterate on these skills over time and to add more as I need them. The most important result of this work is a way to provide my mental context to Claude Code that is both reliable and scalable.
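The “deterministic retrieval” idea can be sketched as a small script that a skill tells Claude Code to run: it executes a fixed SQL query and prints JSON, so the same question always returns the same data. This is a minimal sketch, assuming a hypothetical SQLite `tasks` table with `title`, `created` (ISO 8601 text), and `completed` (0/1) columns; the function names and flags are illustrative, not the actual skill scripts.

```python
from __future__ import annotations

import argparse
import json
import sqlite3
import sys


def open_tasks(conn: sqlite3.Connection, limit: int) -> list[dict]:
    """Return up to `limit` incomplete tasks as plain dicts, newest first.

    Assumes a hypothetical `tasks(title, created, completed)` table.
    """
    cur = conn.execute(
        "SELECT title, created FROM tasks WHERE completed = 0 "
        "ORDER BY created DESC LIMIT ?",
        (limit,),
    )
    return [{"title": title, "created": created} for title, created in cur]


def main(argv: list[str] | None = None) -> int:
    """CLI entry point: print open tasks from the given database as JSON."""
    parser = argparse.ArgumentParser(
        description="Print open tasks as JSON (hypothetical skill helper)."
    )
    parser.add_argument("db", help="path to the SQLite knowledge base")
    parser.add_argument("--limit", type=int, default=20, help="maximum rows to print")
    args = parser.parse_args(argv)

    conn = sqlite3.connect(args.db)
    try:
        rows = open_tasks(conn, args.limit)
    finally:
        conn.close()
    json.dump(rows, sys.stdout, indent=2, ensure_ascii=False)
    print()
    return 0
```

Saved as, say, a hypothetical `open_tasks.py` with the usual `if __name__ == "__main__": sys.exit(main())` footer, a skill could invoke it as `python open_tasks.py path/to/knowledge.db --limit 10`. Printing JSON rather than prose keeps the output stable and easy for the model to parse.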

I like preparing presentations as a list of bullet points, which I then convert to a presentation. I used to do this manually, with a storyboard and the whole nine yards, which took ages. I was curious if I could short-circuit the process with AI.

My recent presentations were made with Gamma, sometimes with a happy assist from Napkin AI.

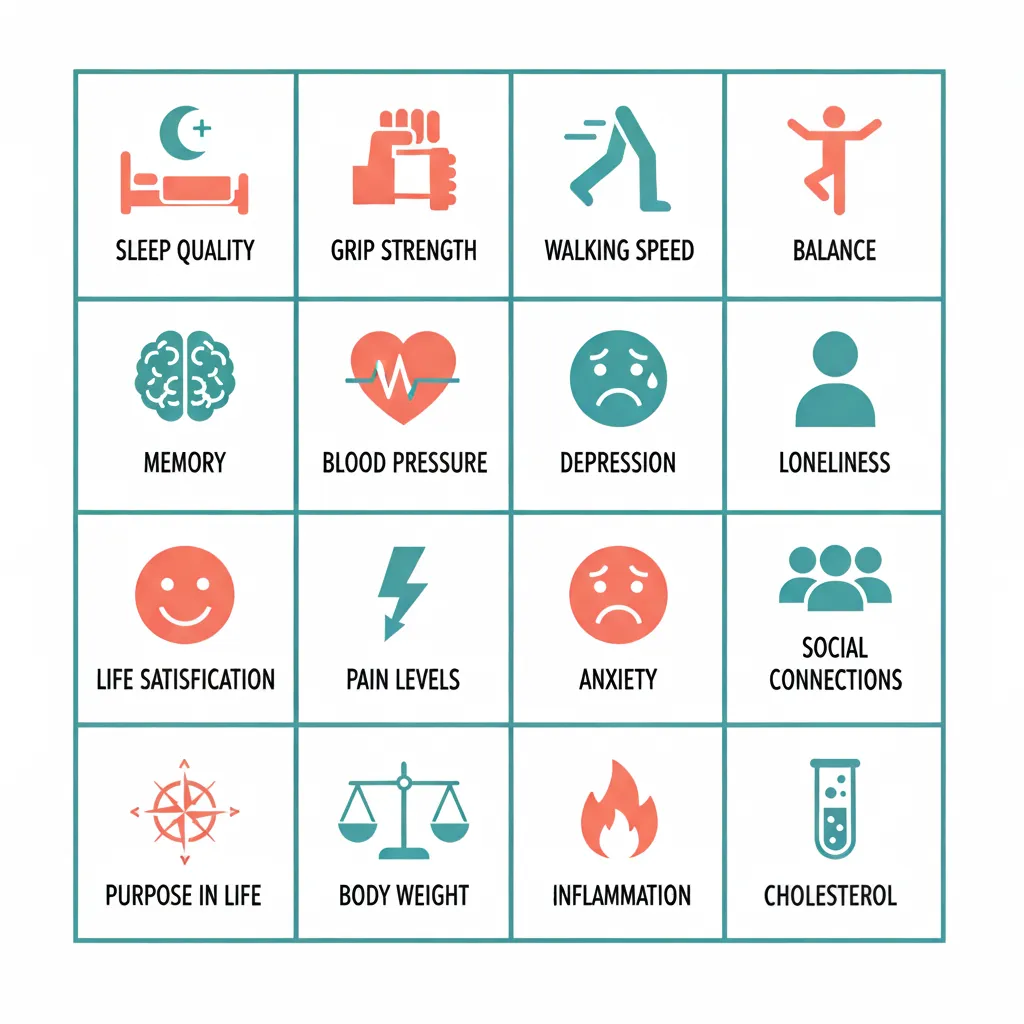

The goal here was to help my partner generate games for each academic paper that her journal club discusses¹, along with the corresponding printable materials.

CLAUDE.md.

Here’s a screenshot of an Outcome Bingo game that Nano Banana generated from game rules produced by Claude Code. “Life Satisfication” is a persistent bug that I find endearing for its Wicked-like verbiage.

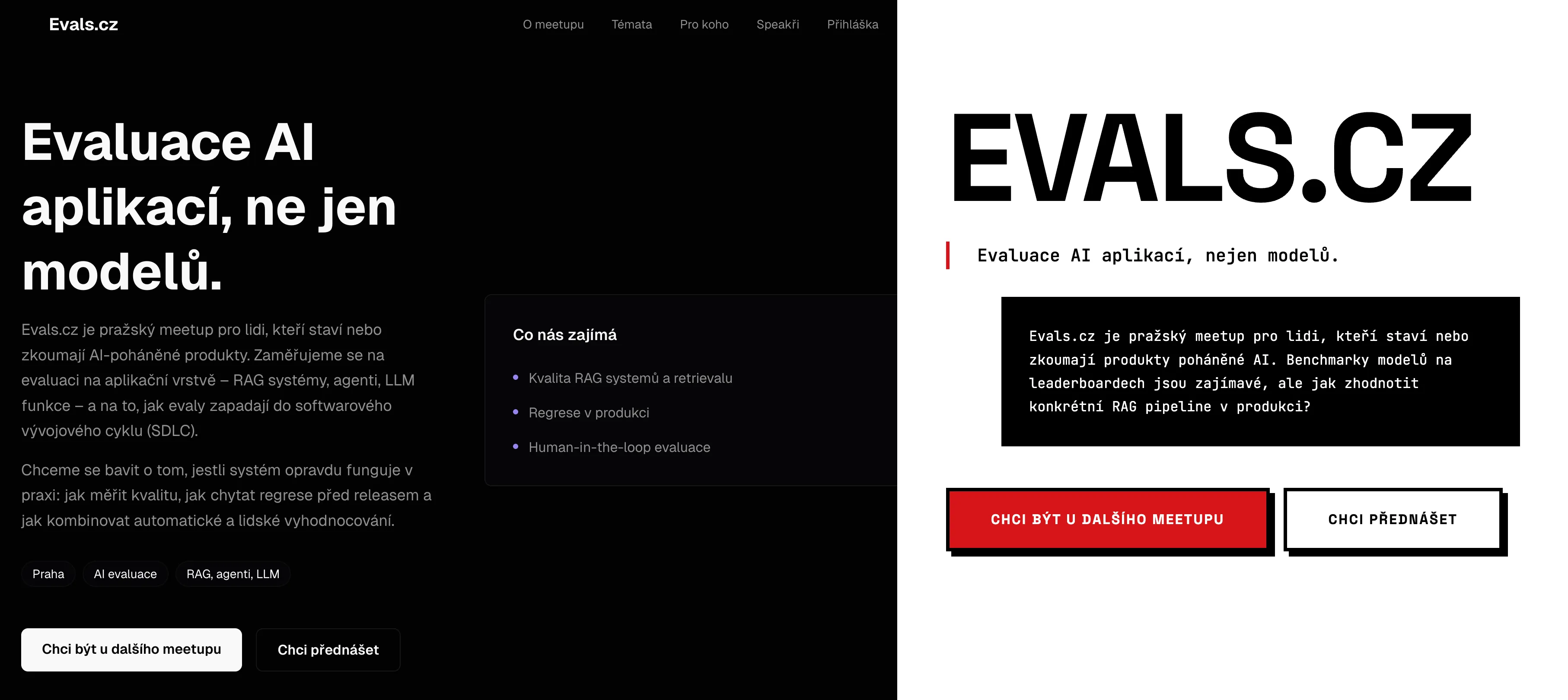

This one is a bit of a postscript. I already knew that Claude Code would do well at generating a website, but I was curious how it would fare against a dedicated website builder like v0.dev.

I used Claude and ChatGPT to generate copy for the website, with slight manual tweaks, and then passed it on to v0.dev to populate the website with it. The result was a… fairly standard vibe-coded website.

I then told Claude Code to look at the website and generate something with a “more original” design. It did! I don’t think I’ve seen a brutalist website before.

v0 output on the left, Claude Code output on the right:

See the full website at evals.cz.

Notably, Claude Code ran into problems running the npx create-x scaffolding scripts and had to be stopped from generating the scaffolding by itself. This wasn’t a big deal (the scaffold would probably have been okay), but there’s no need to take that risk when it can start from a verified clean-slate template.

I think I’ve taken away several tricks:

uv virtual environment for each skill (and since notmuch Python bindings are installed in the system Python, this has led to some fun errors). Mostly, though, it’s been able to recover from these errors by itself. Not sure more hand-holding would have helped.

¹ The journal club is a group of students who meet to discuss academic papers, typically in a structured format.


